If you’re working with language models and have limited data, you might wonder how to make them better for your specific needs. Fine-tuning large language models (LLMs) on small datasets can help achieve that without needing huge resources. This guide walks you through the process in simple terms, focusing on practical steps anyone can follow. Whether you’re a beginner or have some experience, you’ll find useful tips here to get started.

In this post, we’ll cover the basics of what fine-tuning means, why it’s useful even with small data, and a clear path to do it yourself. By the end, you’ll have the knowledge to adapt models like GPT for your own projects. Let’s get into it.

What is Fine-Tuning in LLMs?

Fine-tuning is a way to adjust a pre-trained language model to perform better on a specific task. LLMs like GPT or Llama come already trained on massive amounts of text from the internet. But they might not handle your unique data well right away. Fine-tuning lets you teach the model using your own examples, making it more accurate for things like customer support chats or content generation in a narrow field.

Think of it like taking a general-purpose tool and sharpening it for a particular job. The model learns patterns from your data, improving its responses without starting from scratch.

Why Fine-Tune on Small Datasets?

Many people think you need thousands of examples to fine-tune effectively, but that’s not always true. With small datasets—say, a few hundred samples—you can still see big improvements. This is great for small businesses or hobbyists who don’t have access to big data collections.

One key reason to fine-tune on small datasets is cost. Training a model from scratch takes enormous computing power, whereas fine-tuning builds on weights that already exist. It needs far less time and money, which makes it a realistic option for anyone on a budget.

Another benefit is customization. If your work involves niche topics, like rare languages or industry-specific terms, fine-tuning helps the model handle them better. For instance, you might fine-tune a GPT model on niche data, such as medical notes or legal documents, where general models fall short.

In practice, even 100-500 examples can be enough for a model to adapt well, provided the data is high quality and the task is narrow. Techniques like parameter-efficient fine-tuning (PEFT) make this possible by updating only a small part of the model and leaving the rest frozen.
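To get a feel for how small that "small part" can be, here is a rough arithmetic sketch for LoRA, one common PEFT method. LoRA freezes a weight matrix of shape d x k and trains two low-rank factors of shapes d x r and r x k instead. The sizes used below (a 4096 x 4096 matrix and rank 8) are illustrative assumptions, not figures from any particular model.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the two LoRA factors (d x r and r x k) for one d x k weight matrix."""
    return r * (d + k)


# Illustrative sizes only (assumed, not taken from a specific model)
d, k, r = 4096, 4096, 8

full = d * k                              # weights updated by full fine-tuning of this matrix
lora = lora_trainable_params(d, k, r)     # weights updated by LoRA for the same matrix

print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (r={r}): {lora:,} parameters")
print(f"fraction trained: {lora / full:.2%}")
```

Fewer trainable weights means less memory for gradients and optimizer state, and less room to memorize a tiny dataset.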

Benefits of Fine-Tuning on Small Data

Fine-tuning on small datasets offers several advantages that make it worth trying. First, it boosts performance. A general LLM might give okay answers, but after fine-tuning, it can match your style or handle specific questions more accurately. Accuracy gains of 10-20% on targeted tasks are often cited, though the actual improvement depends on the task, the base model, and the quality of your data.

Second, it’s resource-friendly. You don’t need powerful servers; cloud services let you do this on basic setups. Many tools have free tiers or low-cost options, which keeps the overall cost down.

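Third, it can protect privacy. If your data is sensitive, fine-tuning an open model keeps the data on your own machine or on a platform you control, instead of sending every request to a third-party API. Hosted fine-tuning services are different: they still require you to upload your training data to the provider.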

Finally, it speeds up development. Instead of building a model from the ground up, you tweak an existing one. This means faster results for prototypes or small projects.

In short, fine-tuning on small data democratizes AI, letting more people create custom solutions without big investments.

Preparing Your Dataset

Before you start fine-tuning, your data needs to be ready. This step is crucial because poor data leads to poor results. Focus on quality over quantity here.

Collecting Data

Start by gathering relevant examples. For a small dataset, aim for 200-1000 samples. These could be question-answer pairs, text completions, or conversations.

Sources might include your own records, like email threads or user queries. If you need more, use public datasets but filter them to fit your needs. Tools like Hugging Face Datasets can help find starter sets.

Remember, diversity matters. Include variations in wording or context to help the model generalize.

Cleaning and Formatting

Once collected, clean the data. Remove duplicates, fix spelling errors, and strip out irrelevant parts like ads or boilerplate text.

Format it consistently. Most fine-tuning setups expect JSON or CSV files. For example, each entry might have an “input” field for the prompt and an “output” for the expected response.
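As a minimal sketch of that layout, the snippet below writes and reads a file with one JSON object per line (the JSON Lines convention, which many training tools accept). The “input” and “output” field names come from the paragraph above; the sample records and the file name are made up for illustration.

```python
import json
import tempfile
from pathlib import Path

# Made-up sample records using the "input"/"output" layout
examples = [
    {"input": "How do I reset my password?",
     "output": "Open Settings, choose Security, then select Reset password."},
    {"input": "Where can I find my invoice?",
     "output": "Invoices are listed under Billing on your account page."},
]


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read the file back, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


path = Path(tempfile.gettempdir()) / "train_examples.jsonl"  # hypothetical file name
write_jsonl(examples, path)
loaded = read_jsonl(path)
print(len(loaded), "examples with fields", sorted(loaded[0]))
```

Reading the file back right after writing it is a cheap way to catch encoding or quoting problems before training starts.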

Check for balance—if your task involves categories, ensure even representation to avoid bias.

Tools like Python’s pandas library can automate much of this. Spend time here; it pays off in better model performance.
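Here is a small pandas sketch of the cleaning steps described above: dropping incomplete rows, normalizing whitespace, removing duplicates, and checking category balance. The column names and the sample rows are assumptions for illustration; adapt them to your own data.

```python
import pandas as pd

# Made-up raw data with a duplicate (after trimming) and an incomplete row
raw = pd.DataFrame(
    {
        "input": ["  How do I reset my password? ", "How do I reset my password?",
                  "Where is my invoice?", None],
        "output": ["Use the Reset link.", "Use the Reset link.",
                   "Under Billing.", "Orphaned answer."],
        "category": ["account", "account", "billing", "billing"],
    }
)


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Drop incomplete rows, normalize whitespace, and remove exact duplicates."""
    df = df.dropna(subset=["input", "output"]).copy()
    for col in ["input", "output"]:
        # Trim the ends and collapse runs of whitespace into single spaces
        df[col] = df[col].str.strip().str.replace(r"\s+", " ", regex=True)
    df = df[(df["input"] != "") & (df["output"] != "")]
    return df.drop_duplicates(subset=["input", "output"]).reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
# A quick balance check: how many examples per category survive cleaning
print(cleaned["category"].value_counts())
```

Normalizing whitespace before deduplicating matters here: the first two rows only count as duplicates once the stray spaces are gone.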

Choosing the Right LLM

Not all LLMs are equal for fine-tuning on small datasets. Pick one based on size, capabilities, and ease of use.

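Smaller models like DistilBERT or Phi-2 are great starters. They’re lighter on resources and fine-tune quickly. Keep in mind that DistilBERT is an encoder model suited to tasks like classification, while Phi-2 is a generative model.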

For more power, consider openly available models like Llama 2 or Mistral. They’re free to download (check each model’s license terms) and perform well with limited data.

If you want to fine-tune a GPT model on niche data, use one from OpenAI’s family, like GPT-3.5-turbo. OpenAI supports fine-tuning through its API, though both training and usage are billed.

Factor in your hardware. If running locally, check GPU requirements. Cloud platforms like Google Colab offer free access to decent setups.
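A quick back-of-the-envelope check helps when judging GPU requirements. The sketch below estimates the memory needed just to hold a model’s weights at different precisions. The bytes-per-parameter values are the standard sizes for each format; the model sizes are approximate parameter counts. Training needs noticeably more than this, because gradients, optimizer state, and activations also live in memory.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Approximate parameter counts
models = [("GPT-2 small (~124M)", 124e6), ("a 7B model", 7e9)]

for name, params in models:
    row = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):.2f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

If the fp16 figure alone is close to your GPU’s memory, a smaller model or a quantized load is the safer choice.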

Evaluate based on benchmarks—look at how similar models perform on tasks like yours.

Tools and Platforms for Fine-Tuning

You don’t need to code everything from scratch. Several tools make fine-tuning straightforward.

Hugging Face Transformers is popular. It’s free, open-source, and has libraries for Python. You can load models, prepare data, and train with a few lines of code.

For a no-code approach, try platforms like Replicate or Together AI. They handle the heavy lifting and often have affordable plans.

OpenAI’s fine-tuning API is user-friendly if you’re in their ecosystem. It’s a good fit if you want to fine-tune a GPT model on niche data.

For cheap options, look at Google Colab or Kaggle notebooks. They provide free GPUs for short sessions, which is often enough for a small dataset.

Other tools include the Hugging Face PEFT library, which implements LoRA adapters for efficient tuning and reduces compute needs.

Choose based on your skill level—beginners might prefer APIs, while coders opt for libraries.

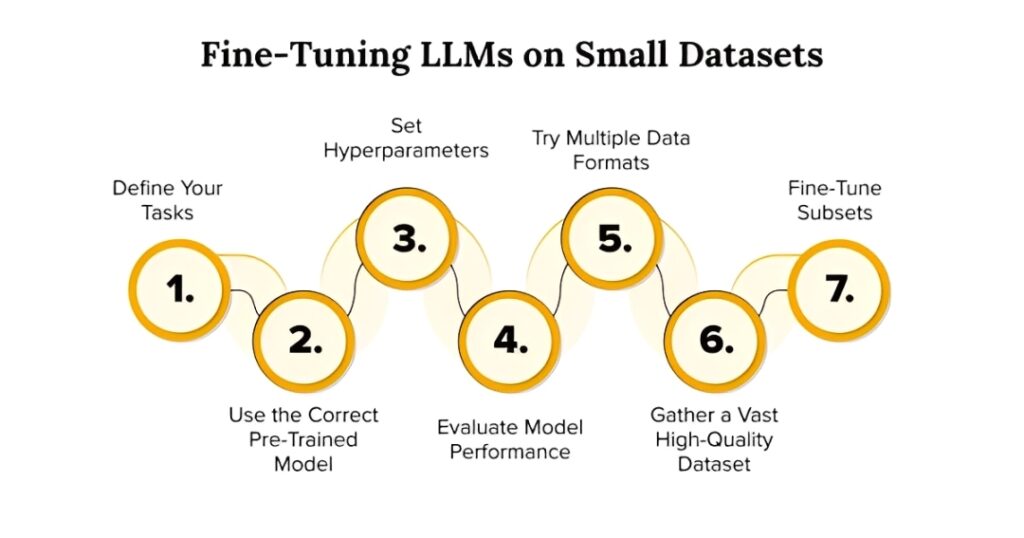

Step-by-Step Guide to Fine-Tuning

Now, the core part: a hands-on guide that breaks the process into clear steps. We’ll use Hugging Face as an example, but the principles apply elsewhere.

Step 1: Set Up Your Environment

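Install the necessary libraries. In Python, run pip install transformers datasets torch. If you plan to use LoRA and the Trainer class in the later steps, also install peft and accelerate.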

Sign up for Hugging Face if needed—it’s free.

Prepare your hardware. Use a GPU if possible; Colab is great for this.

Step 2: Load the Base Model

Choose a model from Hugging Face Hub, like “gpt2” for simplicity.

Use the AutoModelForCausalLM class to load it.

This sets the foundation for your fine-tuning.
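A minimal sketch of this step might look like the following. The helper name load_base_model is hypothetical; the classes and the “gpt2” checkpoint name are the ones mentioned above. Reusing the end-of-text token for padding is a common workaround rather than the only option.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

MODEL_NAME = "gpt2"  # the small GPT-2 checkpoint on the Hugging Face Hub


def load_base_model(model_name: str = MODEL_NAME):
    """Download (or load from the local cache) the tokenizer and the pre-trained model."""
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # GPT-2 ships without a padding token; reusing end-of-text is a common workaround
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    model = AutoModelForCausalLM.from_pretrained(model_name)
    return tokenizer, model


# Usage (downloads the weights on the first call):
# tokenizer, model = load_base_model()
# print(sum(p.numel() for p in model.parameters()), "parameters")
```

Loading the tokenizer from the same checkpoint as the model keeps the vocabulary and the embedding table in sync.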

Step 3: Prepare the Dataset

Load your cleaned data into a Dataset object.

Split it into train and test sets—80/20 is common.

Tokenize the text using the model’s tokenizer. This converts words to numbers the model understands.

Handle padding and truncation to keep inputs uniform.

Step 4: Configure Training Parameters

Set hyperparameters: learning rate (around 5e-5), batch size (4-16 depending on GPU), epochs (3-5 for small data).

Use PEFT methods like LoRA to save resources. This updates fewer parameters, making it efficient for small datasets.

Step 5: Train the Model

Run the training loop. In Hugging Face, the Trainer class handles this.

Monitor loss metrics to see improvement.

It might take 30 minutes to a few hours on a basic setup.

Save checkpoints to avoid losing progress.
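A training run with the Trainer class might be wired up roughly like this. The function name run_training is hypothetical, and it assumes you already have a model, a tokenizer with a padding token, tokenized train and eval datasets, and a TrainingArguments object from the earlier steps.

```python
from transformers import DataCollatorForLanguageModeling, Trainer


def run_training(model, tokenizer, train_dataset, eval_dataset, training_args):
    """Train, evaluate once on the held-out split, and save the result."""
    # mlm=False selects causal language modelling: labels are built from input_ids
    collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        data_collator=collator,
    )

    train_result = trainer.train()
    metrics = trainer.evaluate()

    # Watch both numbers: falling training loss with rising eval loss suggests overfitting
    print(f"training loss: {train_result.training_loss:.4f}")
    print(f"eval loss: {metrics['eval_loss']:.4f}")

    trainer.save_model(training_args.output_dir)
    return trainer
```

How often checkpoints are written is controlled by the save settings in TrainingArguments, not by the Trainer call itself.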

Step 6: Save and Deploy

After training, save the model to Hugging Face or locally.

Test it with new inputs to verify.

For deployment, use inference APIs or host on platforms like Streamlit for apps.
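Saving and a quick smoke test can be sketched as below. The function names are hypothetical, and greedy decoding with 40 new tokens is an arbitrary choice to keep the output repeatable. If the model was wrapped with LoRA, save_pretrained writes only the adapter weights, so the base model is still needed at load time.

```python
import torch


def save_model(model, tokenizer, out_dir: str) -> None:
    """Write the model (or LoRA adapter) and tokenizer files to out_dir."""
    model.save_pretrained(out_dir)
    tokenizer.save_pretrained(out_dir)


def generate(model, tokenizer, prompt: str, max_new_tokens: int = 40) -> str:
    """Generate a continuation for prompt with greedy decoding."""
    model.eval()
    device = next(model.parameters()).device
    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=False,                      # greedy, so repeated runs match
            pad_token_id=tokenizer.eos_token_id,  # avoids a warning for models without a pad token
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Usage, assuming model and tokenizer come from the training step:
# save_model(model, tokenizer, "finetune-out/final")
# print(generate(model, tokenizer, "How do I reset my password?"))
```

Try prompts that were not in the training set; echoing training examples back says little about how the model generalizes.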

This walkthrough keeps things practical; adjust it as needed for your setup.

Evaluating the Fine-Tuned Model

Once trained, check how well it works. Evaluation prevents deploying a faulty model.

Use metrics like perplexity for generation tasks or accuracy for classification.

Compare before and after fine-tuning on a holdout set.

Qualitative checks: Generate samples and review manually.

Tools like Hugging Face’s evaluate library automate this.

If results are off, iterate—maybe add more data or tweak parameters.
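For generation tasks, the before-and-after comparison often comes down to perplexity, which is the exponential of the mean per-token cross-entropy loss on the holdout set. The sketch below shows the arithmetic; the two loss values are hypothetical numbers chosen for illustration, not measured results.

```python
import math


def perplexity(mean_loss: float) -> float:
    """Perplexity is exp of the mean per-token cross-entropy loss (in nats)."""
    return math.exp(mean_loss)


# Hypothetical holdout losses, for illustration only
base_loss, tuned_loss = 3.2, 2.4

base_ppl = perplexity(base_loss)
tuned_ppl = perplexity(tuned_loss)

print(f"base model: perplexity {base_ppl:.1f}")
print(f"fine-tuned: perplexity {tuned_ppl:.1f}")
print(f"relative reduction: {1 - tuned_ppl / base_ppl:.0%}")
```

Lower is better, but only compare perplexities computed with the same tokenizer and the same holdout set.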

Common Challenges and Solutions

Fine-tuning isn’t always smooth. Here are frequent issues.

Overfitting: With small data, the model memorizes instead of learning. Solution: Use regularization like dropout or early stopping.

Underfitting: If you see no improvement, try a larger base model or more epochs.

Resource limits: To keep costs down, use quantized models (for example, 8-bit) to reduce memory use.

Data quality: If outputs are bad, revisit cleaning.

Bias: Small datasets can amplify biases. Check for fairness and diversify samples.

Debugging: Log errors and use simple tests.

Addressing these keeps your project on track.
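For the overfitting case, the idea behind early stopping can be sketched in plain Python: stop once the validation loss has failed to improve for a set number of epochs, and keep the checkpoint from the best epoch. The function name, the patience of 2, and the loss values are all hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops at the first epoch that is `patience` epochs past the best
    one without improving on it by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    # Never triggered: training ran through every epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improving at first, then drifting upward
val_losses = [2.9, 2.5, 2.3, 2.35, 2.4, 2.6]
stop, best = early_stopping_epoch(val_losses, patience=2)
print(f"stop after epoch {stop}, keep the checkpoint from epoch {best}")
```

If you train with the Hugging Face Trainer, the library’s EarlyStoppingCallback provides the same behavior without a hand-written loop.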

Real-World Examples

To make this concrete, consider a case where a small e-commerce site fine-tunes a model for product descriptions.

They collect 300 examples from past listings, clean them, and use Llama 2.

After fine-tuning, descriptions match their brand voice, boosting sales.

Another example: A researcher fine-tunes a GPT model on niche botany data. With 500 plant descriptions, the model answers queries in that field more accurately.

These show practical wins from small-scale fine-tuning.

Conclusion

Fine-tuning LLMs on small datasets opens doors for custom AI without big hurdles. From prep to evaluation, the steps are manageable. Use this guide as a low-cost starting point for your own experiments.

Remember, start small, test often, and scale as needed. With practice, you’ll create models tailored to your needs.

If you try this tutorial, share your results. Happy tuning!